DeepSeek has released a new paper, with co-founder Liang Wenfeng credited as a contributor, detailing how its latest large language model, DeepSeek-V3, achieves efficient training and inference using only 2,048 H800 GPUs, significantly fewer than the tens of thousands typically required. The team attributes this efficiency to four key innovations: memory optimization through multi-head latent attention (MLA), computational savings via a Mixture-of-Experts (MoE) design with FP8 precision, communication improvements using a multi-plane network topology, and faster inference through multi-token prediction (MTP). With MLA, KV cache memory usage is cut to just 70KB per token, as little as one-seventh that of competing models. The MoE architecture activates only 37 billion of the model’s 671 billion parameters per forward pass, reducing training costs by 90% compared to dense models. FP8 training further halves compute and memory usage, with minimal accuracy tradeoff. Beyond the model, the paper also outlines five future directions for AI hardware design, advocating for tighter integration between software and hardware to address memory, compute, and networking bottlenecks. [36Kr, in Chinese]
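To put the quoted figures in perspective, the following is a minimal back-of-the-envelope sketch in Python. It uses only the numbers reported above (37 billion active out of 671 billion total parameters, and 70KB of KV cache per token). The function names, the 32,768-token context length, and the reading of 1KB as 1,024 bytes are assumptions made for illustration and do not come from the paper.

```python
# Figures quoted in the article.
TOTAL_PARAMS_B = 671          # total parameters, in billions
ACTIVE_PARAMS_B = 37          # parameters activated per forward pass, in billions
KV_CACHE_KB_PER_TOKEN = 70    # MLA KV cache per token, in KB


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters used in one forward pass."""
    return active_b / total_b


def kv_cache_gib(tokens: int, kb_per_token: float = KV_CACHE_KB_PER_TOKEN) -> float:
    """KV cache size in GiB for a given number of cached tokens.

    Assumption: 1 KB is taken as 1024 bytes.
    """
    total_bytes = tokens * kb_per_token * 1024
    return total_bytes / 1024**3


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"Active parameters per forward pass: {share:.1%}")

    # Hypothetical context length, chosen only for illustration.
    context_tokens = 32_768
    print(f"KV cache for {context_tokens} tokens: {kv_cache_gib(context_tokens):.4f} GiB")
```

Under these assumptions, roughly 5.5% of the parameters are active per forward pass, and a 32,768-token context would need about 2.19 GiB of KV cache for a single sequence.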

SpaceX will try to achieve 2 impressive feats on Monday

SpaceX will try to achieve 2 impressive feats on Monday

Freedom Came in Cycles by Pamela Sneed

Freedom Came in Cycles by Pamela Sneed

The Myth of Self

The Myth of Self

Murder Most Foul by P. D. James

Murder Most Foul by P. D. James

Indiana Pacers vs. Boston Celtics 2024 livestream: Watch live

Indiana Pacers vs. Boston Celtics 2024 livestream: Watch live

A Collision with the Divine by Helen Macdonald

A Collision with the Divine by Helen Macdonald

The Myth of Self

The Myth of Self

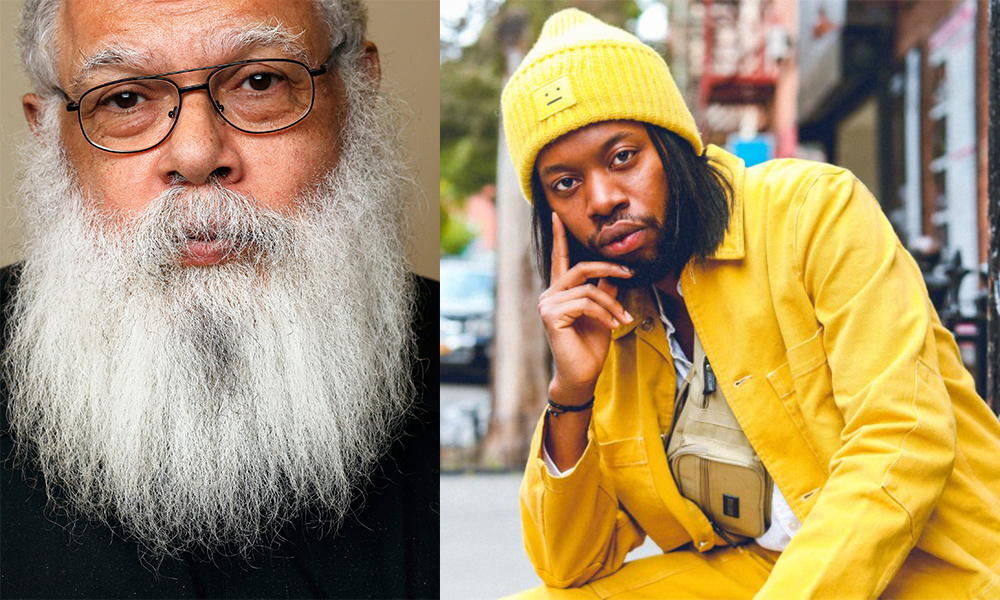

Sex in the Theater: Jeremy O. Harris and Samuel Delany in Conversation by Toniann Fernandez

Sex in the Theater: Jeremy O. Harris and Samuel Delany in Conversation by Toniann Fernandez

AI models don’t understand Gen Alpha slang

AI models don’t understand Gen Alpha slang

Cakes and Ale

Cakes and Ale

Miami Heat vs. Golden State Warriors 2025 livestream: Watch NBA online

Miami Heat vs. Golden State Warriors 2025 livestream: Watch NBA online

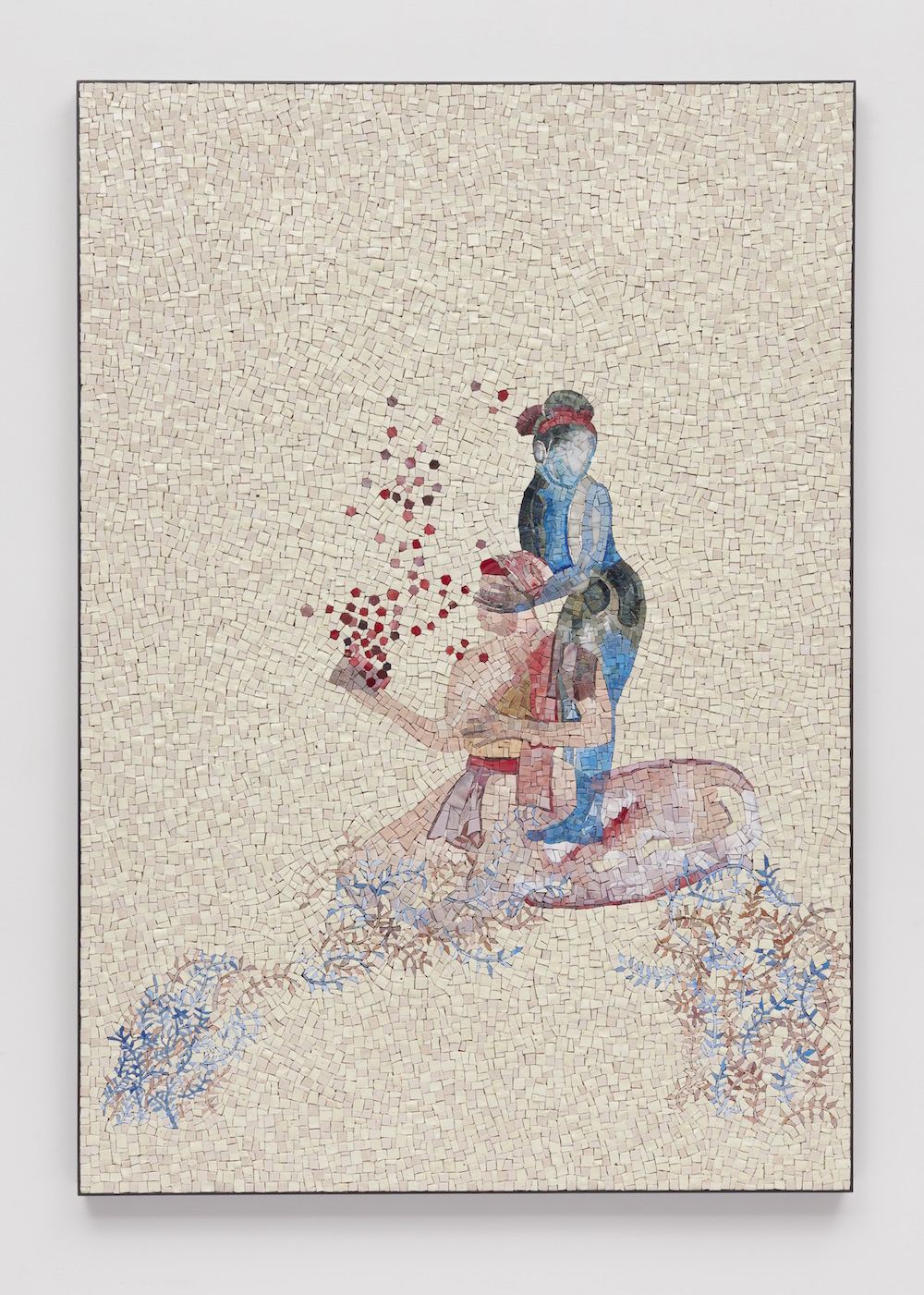

Venus and the Devata by The Paris Review

Venus and the Devata by The Paris Review

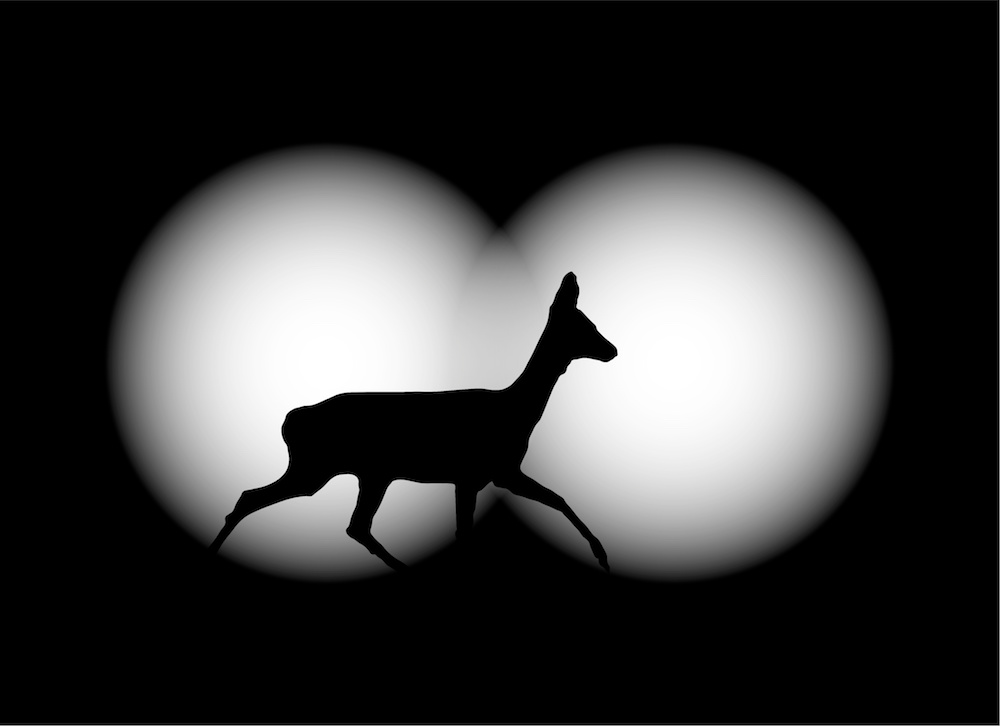

The Shadows below the Shadows

The Shadows below the Shadows

Murder Most Foul by P. D. James

Murder Most Foul by P. D. James

We'll always, er, sorta, have the Paris Climate Agreement

We'll always, er, sorta, have the Paris Climate Agreement

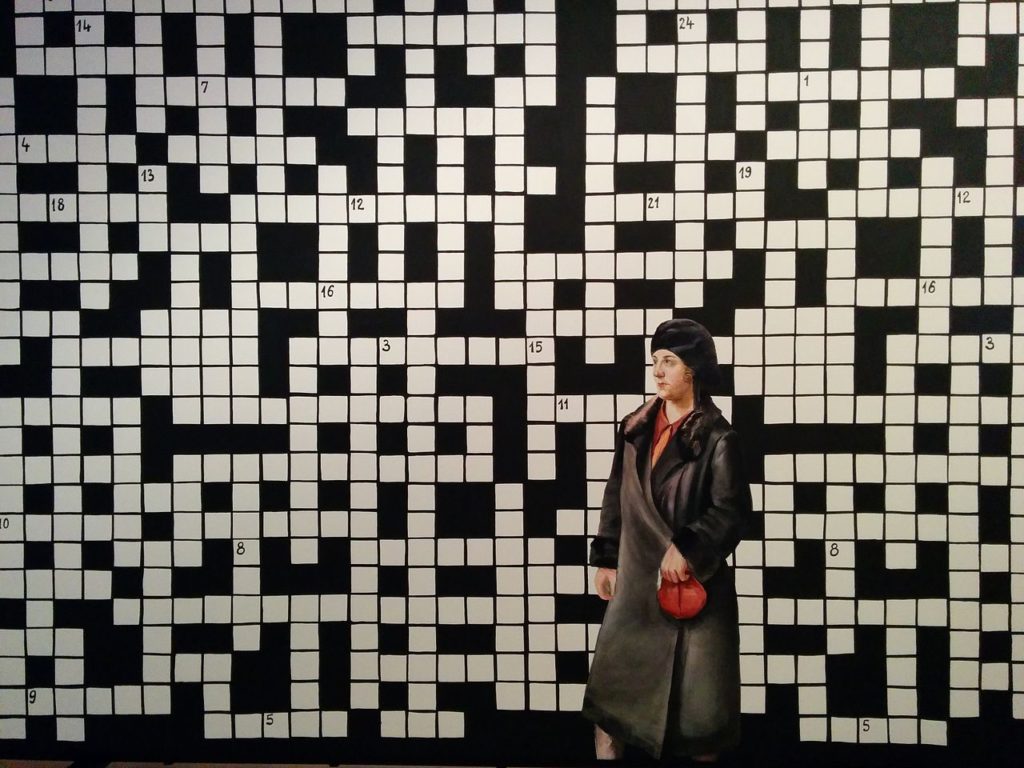

A Brief History of Word Games by Adrienne Raphel

A Brief History of Word Games by Adrienne Raphel

Murder Most Foul by P. D. James

Murder Most Foul by P. D. James

U Break It We Fix It by Sabrina Orah Mark

U Break It We Fix It by Sabrina Orah Mark

Reading the Artifacts After the Capitol Riot by Swati Rana

Reading the Artifacts After the Capitol Riot by Swati Rana

How Tastemade has eaten the internet with Facebook and Snapchat'Persona' spins off with two rhythm games and a new 3DS gameThe LG V30 will have a huge, 6The LG V30 will have a huge, 6Facebook is turning up the heat on media companies yet againGeorge and Amal Clooney to help 3,000 Syrian refugee children go to schoolI'm Littlefinger from 'Game of Thrones' and I'm weird as shitThe Reese's Peanut Butter Doughnut is about to hit Krispy KremeMicrosoft just dropped three cool new Xbox One controllersAugust is a great month for skywatching: How to make the most of itBefore Kim Kardashian, there was Angelyne. Now her identity has been revealed.Clothing brands are making dedicated AirPod pockets now, if that makes sense to youInstagram Stories is 1 year old and still dominatingStar Trek: Discovery: Michael Burnham's connection to Spock and Sarek explained'Confederate' isn't the only postFacebook's smart speaker for video calling sounds really creepyHow Tastemade has eaten the internet with Facebook and SnapchatLatest 'Final Fantasy XV' update lets you dress up like an invincible badassMophie finally made a battery that can charge a laptopAmazon Jobs Day is bleak as hell Peloton will reportedly halt making... basically everything, including all its bikes and treads ChatGPT rolls out voice and image capabilities Tatiana Trouvé’s “Desire Lines” Finds Art in Central Park 'The Office' reboot is a good idea — if Michael, Jim, Dwight, and Pam aren't in it The Sound of Sound: Two Remembrances of Ornette Coleman Juan Felipe Herrera and Tomato Spotify's new Jam feature will let you listen to shared playlists in real time Shrek's swamp is coming to Airbnb In My Copious Free Time... 
TikTok is making 'Euphoria' fanfiction now On Taylor Swift’s Passive Cuteness for Fun and Profit Spotify pilots AI voice translation for podcasts Redditors can earn real money for good posts now How Psychoanalysis Helped John Berryman’s Poetry Remembering James Salter: On His Essay “The Skiing Life” The Treasure Maps of Pamela Singh Having Trouble Sleeping? Read the Ultimate Insomnia Cure. Best Echo deal: The Amazon Echo Pop is 70% off plus a month of Amazon Music Unlimited Richard McGuire on “Here,” His Groundbreaking Graphic Novel

